About Authors:

Krunal Parikh1*, Maheshkumar Kataria2

1 M.Pharm, Quality Assurance,

2Assistant professor, Department of pharmaceutics,

Seth G.L. Bihani S.D. College of Technical Education,

Institute of Pharmaceutical Sciences and Drug Research,

Sri Ganganagar, Rajasthan, INDIA

*Krunal_2922@yahoo.in

ABSTRACT

The Quality System regulation requires that “when computers or automated data processing systems are used as part of production or the quality system, the manufacturer shall validate computer software for its intended use according to an established protocol.” This has been a regulatory requirement for GMP since 1978. In addition to the above validation requirement, computer systems that implement part of a regulated manufacturer’s production processes or quality system (or that are used to create and maintain records required by any other FDA regulation) are subject to the Electronic Records, Electronic Signatures regulation. This regulation establishes additional security, data integrity, and validation requirements when records are created or maintained electronically. These additional Part 11 requirements should be carefully considered and included in system requirements and software requirements for any automated record keeping systems.


Reference Id: PHARMATUTOR-ART- 1454

INTRODUCTION

The concept of validation was developed in the 1970s and is widely credited to Ted Byers who was then Associate Director of Compliance at the U.S. FDA. The concept was focused on: “Establishing documented evidence which provides a high degree of assurance that a specific process will consistently produce a product meeting its predetermined specifications and quality attributes”.1

Throughout the 1980s, computer systems validation was debated primarily in the U.S.A. Ken Chapman published a paper covering this period during which the FDA gave advice on the following GMP issues:

· Input/output checking

· Batch records

· Applying GMP to hardware and software

· Supplier responsibility

· Application software inspection

· FDA investigation of computer systems

· Software development activities

Computer systems validation also became a high profile industry issue in Europe in 1991 when several European manufacturers and products were temporarily banned from the U.S.A. for computer systems noncompliance. The computer systems in question included autoclave PLCs and SCADA systems. The position of the FDA was clear; the manufacturer had failed to satisfy their “concerns” that computer systems should:

· Perform accurately and reliably

· Be secure from unauthorized or inadvertent changes

· Provide for adequate documentation of the process

Meanwhile two experienced GMP regulatory inspectors, Ron Tetzlaff and Tony Trill, published papers respectively presenting the FDA’s and U.K.’s MCA inspection practice for computer systems. These papers presented a comprehensive perspective on the current validation expectations of GMP regulatory authorities.

Topics covered included:

· Life-cycle approach

· Quality management

· Procedures

· Training

· Validation protocols

· Qualification evidence

· Change control

· Audit trail

· Ongoing evaluation


The Quality System regulation requires that “when computers or automated data processing systems are used as part of production or the quality system, the manufacturer shall validate computer software for its intended use according to an established protocol.” This has been a regulatory requirement for GMP since 1978. In addition to the above validation requirement, computer systems that implement part of a regulated manufacturer’s production processes or quality system (or that are used to create and maintain records required by any other FDA regulation) are subject to the Electronic Records, Electronic Signatures regulation. This regulation establishes additional security, data integrity, and validation requirements when records are created or maintained electronically. These additional Part 11 requirements should be carefully considered and included in system requirements and software requirements for any automated record keeping systems. System validation and software validation should demonstrate that all Part 11 requirements have been met.

Computers and automated equipment are used extensively throughout the pharmaceutical, biotech, medical device, and medical gas industries in areas such as design, laboratory testing and analysis, product inspection and acceptance, production and process control, environmental controls, packaging, labelling, traceability, document control, complaint management, and many other aspects of the quality system. Increasingly, automated plant floor operations have involved extensive use of embedded systems in:

· PLCs

· digital function controllers

· statistical process control

· supervisory control and data acquisition

· robotics

· human–machine interfaces

· input/output devices

· computer operating systems

There are basically two types of computers, analog and digital. The analog computer does not compute directly with numbers. It accepts electrical signals of varying magnitude (analog signals) which in practical use are analogous to, or represent, some continuous physical magnitude such as pressure or temperature. Analog computers are sometimes used for scientific, engineering and process-control purposes. In the majority of industry applications used today, analog values are converted to digital form by an analog-to-digital converter and processed by digital computers. The digital computer is the general-use computer used for manipulating symbolic information. In most applications the symbols manipulated are numbers and the operations performed on the symbols are the standard arithmetical operations. Complex problem solving is achieved by basic operations of addition, subtraction, multiplication and division. A digital computer is designed to accept and store instructions (program), accept information (data), process the data as specified in the program, and display the results of the processing in a selected manner. Instructions and data are in a coded form that the computer is designed to accept. The computer performs automatically and in sequence according to the program.1
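As a rough illustration of the analog-to-digital step described above, the following Python sketch quantizes a continuous reading into an integer code and scales it back to engineering units. The function names, the 12-bit resolution, and the 0 to 10 V span are assumptions chosen for the example, not details of any particular converter.

```python
def quantize(value, v_min, v_max, bits):
    """Map an analog value in [v_min, v_max] to an integer code of the given width."""
    if not v_min <= value <= v_max:
        raise ValueError("value outside the converter input range")
    levels = (1 << bits) - 1  # highest code, e.g. 4095 for 12 bits
    return round((value - v_min) / (v_max - v_min) * levels)


def to_engineering_units(code, v_min, v_max, bits):
    """Scale an integer code back to the physical range it represents."""
    levels = (1 << bits) - 1
    return v_min + code / levels * (v_max - v_min)


# Hypothetical 12-bit converter spanning 0 to 10 V.
code = quantize(5.0, 0.0, 10.0, 12)
recovered = to_engineering_units(code, 0.0, 10.0, 12)
```

The recovered value differs from the original by at most one quantization step, which is the kind of accuracy limit a validation test for an input channel would need to account for.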

For the EC Guide to Good Manufacturing Practice for Medicinal Products, Annex 11 [2] identifies the following requirements that need to be addressed for computerized system application:

· GMP risk assessment

· Qualified/trained resource

· System life-cycle validation

· System environment

· Current specifications

· Software quality assurance

· Formal testing/acceptance

· Data entry authorization

· Data plausibility checks

· Communication diagnostics

· Access security

· Batch release authority

· Formal procedures/contracts

· Change control

· Electronic data hardcopy

· Secure data storage

· Contingency/recovery plans

· Maintenance plans/records
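One item in the list above, data plausibility checks, lends itself to a small sketch. The Python below is only an illustration under assumed rules (a fixed expected range and an optional maximum step from the previous entry); the function name, parameters, and limits are hypothetical and are not taken from Annex 11.

```python
import math


def plausibility_problems(value, low, high, previous=None, max_step=None):
    """Return a list of reasons an entry looks implausible (empty if none)."""
    if not math.isfinite(value):
        return ["value is not a finite number"]
    problems = []
    if value < low or value > high:
        problems.append(f"value {value} outside expected range {low}..{high}")
    if previous is not None and max_step is not None:
        step = abs(value - previous)
        if step > max_step:
            problems.append(f"change of {step} exceeds allowed step {max_step}")
    return problems


# Hypothetical sterilizer temperature entry in degrees Celsius.
ok_entry = plausibility_problems(121.0, 100.0, 140.0)
bad_entry = plausibility_problems(1210.0, 100.0, 140.0, previous=121.0, max_step=5.0)
```

Returning the reasons rather than a bare pass/fail flag makes it easier to record why an entry was queried.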

Systems elements in computer validation which need to be considered are:

· Hardware (equipment)

· Software (procedures)

· People (users)

The purpose of computer system validation is to provide an acceptable degree of documented evidence and confidence that the system fulfils its intended use with accuracy, consistency and reliability.5


COMPUTERIZED SYSTEM VALIDATION QUALITY SYSTEM

The validation of a computerized control system to FDA requirements can be broken down into a number of phases which are interlinked with the overall project program. A typical validation program for a control system also includes the parallel design and development of control and monitoring instrumentation. The validation of a computer system involves four fundamental tasks:

· Defining and adhering to a validation plan to control the application and system operation, including GMP risk and validation rationale

· Documenting the validation life-cycle steps to provide evidence of system accuracy, reliability, repeatability, and data integrity

· Conducting and reporting the qualification testing required to achieve validation status

· Undertaking periodic reviews throughout the operational life of the system to demonstrate that validation status is maintained

Other key considerations include the following:

· Traceability and accountability of information should be maintained throughout validation life-cycle documents (particularly important in relating qualification tests to defined requirements). The mechanism (e.g., a matrix) for establishing and maintaining requirements traceability should document where a user-specified requirement is met by more than one system function or covered by multiple tests.

· All qualification activities must be performed in accordance with predefined protocols/test procedures that must generate sufficient approved documentation to meet the stated acceptance criteria.

· An incident log should be provided to record any test deviations during qualification and any system discrepancies, errors, or failures during operational use, and to manage the resolution of such issues.

A typical Quality System includes the following phases.1

· Definition phase

· System development phase

· Commissioning and in-place qualification phase

· Ongoing maintenance phase
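The requirements traceability mechanism mentioned among the key considerations can be sketched as a small matrix that records which system functions and which qualification tests cover each user requirement. This is a minimal Python illustration; the identifiers (URS-001, F-10, OQ-01 and so on) and function names are hypothetical.

```python
from collections import defaultdict


def build_trace_matrix(links):
    """Build a matrix from (requirement_id, function_id, test_id) tuples.

    A requirement may appear in several tuples when it is met by more than
    one function or covered by multiple tests. Use None for a missing link.
    """
    matrix = defaultdict(lambda: {"functions": set(), "tests": set()})
    for requirement, function, test in links:
        row = matrix[requirement]
        if function:
            row["functions"].add(function)
        if test:
            row["tests"].add(test)
    return dict(matrix)


def untested_requirements(requirements, matrix):
    """List requirements that have no qualification test traced to them."""
    return sorted(r for r in requirements if not matrix.get(r, {}).get("tests"))


links = [
    ("URS-001", "F-10", "OQ-01"),
    ("URS-001", "F-11", "OQ-02"),  # same requirement, second function and test
    ("URS-002", "F-12", None),     # implemented but not yet tested
]
matrix = build_trace_matrix(links)
gaps = untested_requirements(["URS-001", "URS-002", "URS-003"], matrix)
```

Here `gaps` lists the requirements that still lack test coverage, which is the information a reviewer would look for when relating qualification tests to defined requirements.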

Definition Phase

Validation starts at the definition (conceptual design) phase because the FDA expects to see documentary evidence that the chosen system vendor and the software proposed meet the customer’s predefined selection criteria. Vendor acceptance criteria, which must be defined by the customer, should typically include the following.

The Vendor’s Business Practices

· Vendor certification to an approved QA standard. Certification may be a consideration when selecting a systems vendor. Initiatives that promote the use of international standards to improve the quality management of software development should be considered.

· Vendor Audit by the customer to ensure company standards and practices are known and are being followed.

· Vendor end user support agreements.

· Vendor financial stability.

· Biographies for the vendor’s proposed project personnel (interviews also should be considered).

· Checking customer references and visiting their sites should be considered.

The Vendor’s Software Practices

· Software development methodology

· Vendor’s experience in using the project software including: operating system software; application software; “off-the-shelf” and support software package (e.g., archiving, networking, batch software).

· Software performance and development history

· Software updates

· The vendor must make provision for source code to be accessible to the end user (e.g., have an escrow or similar agreement) and should provide a statement to this effect. Escrow is the name given to a legally binding agreement between a supplier and a customer which permits the customer access to source code, which is stored by a third party organization. The agreement also permits the customer access to the source code should the supplier become bankrupt.

Vendor acceptance can be divided into these areas:

· Vendor prequalification (to select suitable vendors to receive the Tender enquiry package)

· Review of the returned Tenders

· Audit of the most suitable vendor(s)

Other documentation produced during the definition phase includes the URS, standard specifications and Tender support documentation. The Tender enquiry package must be reviewed by the customer prior to issue to selected vendors. This review, called SQ, is carried out to ensure that the customer’s technical and quality requirements are fully addressed.

System Development Phase

The system development phase is the period from Tender award to delivery of the control system to site. It can be subdivided into four subphases:

· Design agreement

· Design and development

· Development testing

· Predelivery or FAT

The design agreement phase comprises the development and approval of the system vendor’s Functional Design Specification, its associated FAT Specification, and the Quality Plan for the project. These form the basis of the contractual agreement between the system vendor and the customer. The design and development phase involves the development and approval of the detailed system (hardware and software) design and testing specifications.

The software specifications comprise the Software Design Specification and its associated Software Module Coding. The hardware specifications comprise the Computer Hardware Design Specification and its associated Hardware Test Specification and Computer Hardware Production.

The development testing phase comprises the structured testing of the hardware and software against the detailed design specifications starting from the lowest level and working up to a fully integrated system. The systems vendor must follow a rigorous and fully documented testing regime to ensure that each item of hardware and software module developed or modified performs the function(s) required without degrading other modules or the systems as a whole.

The predelivery acceptance phase comprises the FAT, which is witnessed by the customer, and the DQ review by the customer to ensure the system design meets technical (system functionality and operability) and quality (auditable, structured documentation) objectives.

Throughout the system development phase, the systems vendor should be subject to a number of quality audits by the customer, or their nominated agents, to ensure that the Quality Plan for the project is being complied with and that all documentation is being completed correctly. In addition, the vendor should conduct internal audits, and the reports should be available for inspection by the customer. The systems vendor also must enforce a strict change control procedure to enable all modifications and changes to the system to be thoroughly designed, tested, and documented. Change control is a formal system by which qualified representatives of appropriate disciplines review proposed or actual changes that might affect a validated status. The intent is to determine the need for action that would ensure and document that the component or system is maintained in a validated state. The audit trail documentation introduced and maintained by the Quality Plan and the test documentation can be used as evidence by the customer during the FDA’s inspections that the system meets the functionality required. In particular, the test and change control documentation will demonstrate a positive, thorough, and professional approach to validation.

Commissioning and In-Place Qualification Phase

The commissioning and qualification phase encompasses the System Commissioning on site, Site Acceptance Testing, IQ, OQ, and, where applicable, PQ activities for the project. The most important part of this phase is qualification based on the system specification documentation: the system installation and operation must be confirmed against its documents. All system adjustments and changes occurring in this phase must result in updating of the corresponding specification document. Because most last-minute changes are discovered during this phase, keeping the documents current here is what builds a reliable system baseline in support of a life cycle approach. No benefit of any life cycle approach can be obtained when the system and its documentation do not match after completion of this phase.

Ongoing Maintenance Phase

The term maintenance does not mean the same when applied to hardware and software. The operational maintenance of hardware differs from that of software because their failure/error mechanisms are different. Hardware maintenance typically includes preventive hardware maintenance actions, component replacement, and corrective changes. Software maintenance includes corrective, perfective, and adaptive maintenance but does not include preventive maintenance actions or software component replacement.

Changes made to correct errors and faults in the software are corrective maintenance. Changes made to the software to improve the performance, maintainability, or other attributes of the software system are perfective maintenance. Software changes to make the software system usable in a changed environment are adaptive maintenance. When changes are made to a software system, sufficient regression analysis and testing should be conducted to demonstrate that portions of the software not involved in the change were not adversely impacted. This is in addition to testing that evaluates the correctness of the implemented change(s).

The specific validation effort necessary for each change is determined by the type of change, the development products affected, and the impact of those products on the operation of the system. All proposed modifications, enhancements, or additions to the system should be assessed to determine the effect each change would have on the entire system. This information should determine the extent to which verification and/or validation tasks need to be iterated.

Documentation should be carefully reviewed to determine which documents have been impacted by a change. All approved documents (e.g., specifications, user manuals, drawings, etc.) that have been affected should be updated in accordance with the applicable site or corporate change management procedures. Specifications should be updated before any change is implemented.1

Existing System Validation

For retrospective validation, emphasis is put on the assembly of appropriate historical records for system definition, controls, and testing. Existing systems that are not well documented and do not demonstrate change control and/or do not have approved test records cannot be considered as candidates for retrospective validation as defined by the regulatory authorities.

Consequently, for a system that is in operational use and does not meet the criteria for retrospective validation, the approach should be to establish documented evidence that the system does what it purports to do. To do this, an initial assessment is required to determine the extent of documented records that exist. Existing documents should be collected, formally reviewed, and kept in a system “history file” for reference and to establish the baseline for the validation exercise. From the document gap analysis the level of redocumenting and retesting that is necessary can be identified and planned.4,7
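The document gap analysis described above amounts to comparing the documents a validation exercise expects against what the history file actually holds. The Python sketch below shows one way this could be expressed; the document names and the approved/unapproved flag are assumptions made for the example.

```python
def document_gap_analysis(required, history_file):
    """Compare required document types against the system history file.

    required: set of document type names expected for validation.
    history_file: dict mapping document type -> True if formally approved.
    """
    held = set(history_file)
    missing = sorted(required - held)
    unapproved = sorted(d for d in required & held if not history_file[d])
    return {"missing": missing, "unapproved": unapproved}


# Hypothetical document set for an existing control system.
required_docs = {"URS", "functional specification", "test records", "change log"}
history = {"URS": True, "test records": False, "user manual": True}
gaps = document_gap_analysis(required_docs, history)
```

Missing items point to redocumenting, while items held without approval point to review and, where necessary, retesting.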

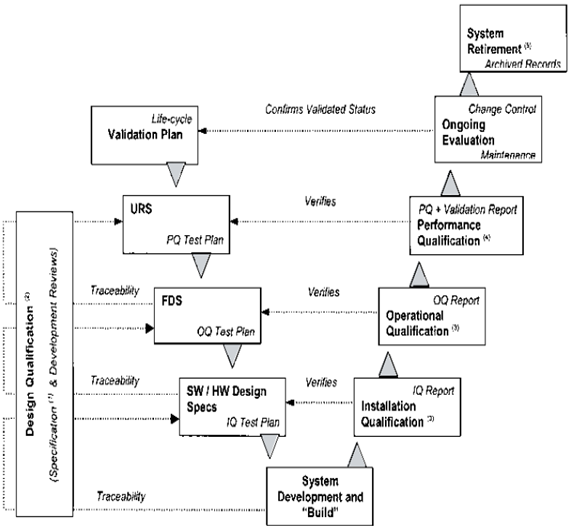

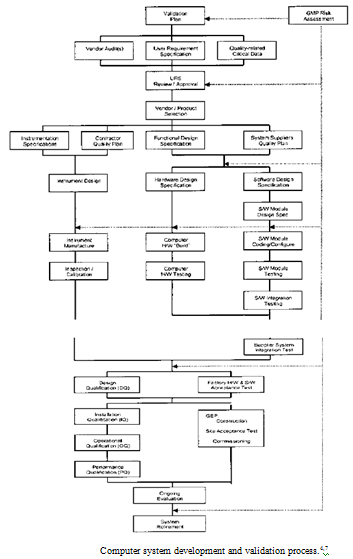

Framework for system validation.4,7

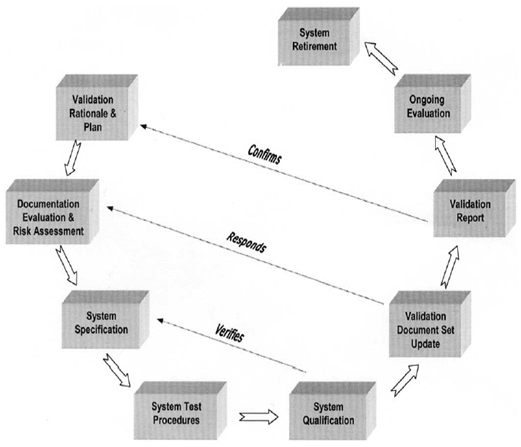

A validation cycle for existing systems.4,7

SOFTWARE VALIDATION

The Quality System regulation treats “verification” and “validation” as separate and distinct terms. On the other hand, many software engineering journal articles and textbooks use the terms verification and validation interchangeably, or in some cases refer to software “verification, validation, and testing (VV&T)” as if it is a single concept, with no distinction among the three terms.

Software verification provides objective evidence that the design outputs of a particular phase of the software development life cycle meet all of the specified requirements for that phase. Software verification looks for consistency, completeness, and correctness of the software and its supporting documentation, as it is being developed, and provides support for a subsequent conclusion that software is validated. Software testing is one of many verification activities intended to confirm that software development output meets its input requirements. Other verification activities include various static and dynamic analyses, code and document inspections, walkthroughs, and other techniques.

Software validation is a part of the design validation for the project, but is not separately defined in the Quality System regulation. FDA considers software validation to be “confirmation by examination and provision of objective evidence that software specifications conform to user needs and intended uses, and that the particular requirements implemented through software can be consistently fulfilled.” In practice, software validation activities may occur both during as well as at the end of the software development life cycle to ensure that all requirements have been fulfilled. Since software is usually part of a larger hardware system, the validation of software typically includes evidence that all software requirements have been implemented correctly and completely and are traceable to system requirements. A conclusion that software is validated is highly dependent upon comprehensive software testing, inspections, analyses, and other verification tasks performed at each stage of the software development life cycle.

Software verification and validation are difficult in nature because a developer cannot test forever, and it is hard to know how much evidence is enough. In large measure, software validation is a matter of developing a “level of confidence” that the application meets all requirements and user expectations for the software automated functions. Measures such as defects found in specifications documents, estimates of defects remaining, testing coverage, and other techniques are all used to develop an acceptable level of confidence before shipping the product. The level of confidence, and therefore the level of software validation, verification, and testing effort needed, will vary depending upon the application.

Many firms have asked for specific guidance on what the FDA expects them to do to ensure compliance with the Quality System regulation with regard to software validation. Validation of software has been conducted in many segments of the software industry for almost three decades. Due to the great variety of pharmaceuticals, medical devices, processes, and manufacturing facilities, it is not possible to state in one document all of the specific validation elements that are applicable. However, a general application of several broad concepts can be used successfully as guidance for software validation. These broad concepts provide an acceptable framework for building a comprehensive approach to software validation.

Requirements Specification

While the Quality System regulation states that design input requirements must be documented, and that specified requirements must be verified, the regulation does not further clarify the distinction between the terms “requirement” and “specification.” A requirement can be any need or expectation for a system or for its software. Requirements reflect the stated or implied needs of the customer, and may be market-based, contractual, or statutory, as well as an organization’s internal requirements. There can be many different kinds of requirements (e.g., design, functional, implementation, interface, performance, or physical requirements). Software requirements are typically derived from the system requirements for those aspects of system functionality that have been allocated to software. Software requirements are typically stated in functional terms and are defined, refined, and updated as a development project progresses. Success in accurately and completely documenting software requirements is a crucial factor in successful validation of the resulting software.

A specification is defined as “a document that states requirements.” It may refer to or include drawings, patterns, or other relevant documents and usually indicates the means and the criteria whereby conformity with the requirement can be checked. There are many different kinds of written specifications, e.g., system requirements specification, software requirements specification, software design specification, software test specification, software integration specification, etc. All of these documents establish “specified requirements” and are design outputs for which various forms of verification are necessary.

A documented software requirements specification provides a baseline for both validation and verification. The software validation process cannot be completed without an established software requirements specification.1,3


Defect Prevention

Software quality assurance needs to focus on preventing the introduction of defects into the software development process and not on trying to “test quality into” the software code after it is written. Software testing is very limited in its ability to surface all latent defects in software code. For example, the complexity of most software prevents it from being exhaustively tested. Software testing is a necessary activity. However, in most cases software testing by itself is not sufficient to establish confidence that the software is fit for its intended use. In order to establish that confidence, software developers should use a mixture of methods and techniques to prevent software errors and to detect software errors that do occur. The “best mix” of methods depends on many factors including the development environment, application, size of project, language, and risk.

Time and Effort

To build a case that the software is validated requires time and effort. Preparation for software validation should begin early, i.e., during design and development planning and design input. The final conclusion that the software is validated should be based on evidence collected from planned efforts conducted throughout the software life cycle.

Software Life Cycle

Software validation takes place within the environment of an established software life cycle. The software life cycle contains software engineering tasks and documentation necessary to support the software validation effort.

In addition, the software life cycle contains specific verification and validation tasks that are appropriate for the intended use of the software. No one life cycle model can be recommended for all software development and validation projects, but an appropriate and practical software life cycle should be selected and used for a software development project.

Plans

The software validation process is defined and controlled through the use of a plan. The software validation plan defines “what” is to be accomplished through the software validation effort. Software validation plans are a significant quality system tool. Software validation plans specify areas such as scope, approach, resources, schedules and the types and extent of activities, tasks, and work items.

Procedures

The software validation process is executed through the use of procedures. These procedures establish “how” to conduct the software validation effort. The procedures should identify the specific actions or sequence of actions that must be taken to complete individual validation activities, tasks, and work items.

Software Validation After a Change

Due to the complexity of software, a seemingly small local change may have a significant global system impact. When any change (even a small change) is made to the software, the validation status of the software needs to be re-established. Whenever software is changed, a validation analysis should be conducted not just for validation of the individual change but also to determine the extent and impact of that change on the entire software system. Based on this analysis, the software developer should then conduct an appropriate level of software regression testing to show that unchanged but vulnerable portions of the system have not been adversely affected. Design controls and appropriate regression testing provide the confidence that the software is validated after a software change.
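To make the idea of impact analysis before regression testing more concrete, the following Python sketch walks a module dependency map to find everything that could be affected by a change, then selects the tests attached to those modules. The module names, test identifiers, and the dependency map itself are hypothetical; a real project would derive them from its own design documentation.

```python
from collections import deque


def impacted_modules(changed, depends_on):
    """Return the changed modules plus every module that depends on them.

    depends_on maps each module to the set of modules it uses directly.
    """
    dependents = {}
    for module, dependencies in depends_on.items():
        for dependency in dependencies:
            dependents.setdefault(dependency, set()).add(module)
    seen = set(changed)
    queue = deque(changed)
    while queue:  # breadth-first walk over "is used by" links
        current = queue.popleft()
        for user in dependents.get(current, ()):
            if user not in seen:
                seen.add(user)
                queue.append(user)
    return seen


def regression_tests(changed, depends_on, tests_by_module):
    """Select the tests attached to any impacted module."""
    selected = set()
    for module in impacted_modules(changed, depends_on):
        selected.update(tests_by_module.get(module, ()))
    return sorted(selected)


depends_on = {"report": {"calc"}, "calc": {"io"}, "audit": {"io"}, "ui": {"report"}}
tests_by_module = {"calc": ["T1"], "report": ["T2"], "audit": ["T3"], "ui": ["T4"]}
selected = regression_tests({"calc"}, depends_on, tests_by_module)
```

In this example a change to `calc` pulls in the tests for `report` and `ui` as well, since both rely on it, while `audit` is left out because it does not depend on the changed module.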

Validation Coverage

Validation coverage should be based on the software’s complexity and safety risk and not on firm size or resource constraints. The selection of validation activities, tasks, and work items should be commensurate with the complexity of the software design and the risk associated with the use of the software for the specified intended use. For lower risk applications, only baseline validation activities may be conducted. As the risk increases, additional validation activities should be added to cover the additional risk. Validation documentation should be sufficient to demonstrate that all software validation plans and procedures have been completed successfully.
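The principle that baseline activities apply at low risk and further activities are added as risk increases can be illustrated with a simple cumulative lookup. The three risk levels and the activity names in this Python sketch are purely illustrative assumptions; the text does not prescribe a specific tiering.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical activities introduced at each risk level (not a regulatory list).
ACTIVITIES_ADDED_AT = {
    Risk.LOW: ["requirements review", "functional testing"],
    Risk.MEDIUM: ["code inspection", "integration testing"],
    Risk.HIGH: ["static analysis", "independent walkthrough"],
}


def planned_activities(risk):
    """Return baseline activities plus those added at each level up to `risk`."""
    plan = []
    for level in Risk:  # members iterate in definition order: LOW, MEDIUM, HIGH
        if level <= risk:
            plan.extend(ACTIVITIES_ADDED_AT[level])
    return plan


low_plan = planned_activities(Risk.LOW)
high_plan = planned_activities(Risk.HIGH)
```

Because the plan is cumulative, a higher-risk application always includes everything required at the lower levels.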

Flexibility and Responsibility

Specific implementation of these software validation principles may be quite different from one application to another. The manufacturer has flexibility in choosing how to apply these validation principles, but retains ultimate responsibility for demonstrating that the software has been validated. Software is designed, developed, validated, and regulated in a wide spectrum of environments, and for a wide variety of applications with varying levels of risk.

In each environment, software components from many sources may be used to create the software (e.g., in-house developed software, off-the-shelf software, contract software, shareware). In addition, software components come in many different forms (e.g., application software, operating systems, compilers, debuggers, configuration management tools, and many more). The validation of software in these environments can be a complex undertaking; therefore, it is appropriate that all of these software validation principles be considered when designing the software validation process. The resultant software validation process should be commensurate with the safety risk associated with the system, device, or process.

Software validation activities and tasks may be dispersed, occurring at different locations and being conducted by different organizations. However, regardless of the distribution of tasks, contractual relations, source of components, or the development environment, the manufacturer retains ultimate responsibility for ensuring that the software is validated. Software validation is accomplished through a series of activities and tasks that are planned and executed at various stages of the software development life cycle. These tasks may be one-time occurrences or may be iterated many times, depending on the life cycle model used and the scope of changes made as the software project progresses.1

SOFTWARE LIFE CYCLE ACTIVITIES

Software developers should establish a software life cycle model that is appropriate for their product and organization. The software life cycle model that is selected should cover the software from its birth to its retirement. Activities in a typical software life cycle model include the following:

· Quality Planning

· System Requirements Definition

· Detailed Software Requirements Specification

· Software Design Specification

· Construction or Coding

· Testing

· Installation

· Operation and Support

· Maintenance

· Retirement

Verification, testing and other tasks that support software validation occur during each of the above activities. A life cycle model organizes these software development activities in various ways and provides a framework for monitoring and controlling the software development project.

For each of the software life cycle activities, there are certain “typical” tasks that support a conclusion that the software is validated. However, the specific tasks to be performed, their order of performance, and the iteration and timing of their performance will be dictated by the specific software life cycle model that is selected and the safety risk associated with the software application. For very low risk applications, certain tasks may not be needed at all. However, the software developer should at least consider each of these tasks and should define and document which tasks are or are not appropriate for their specific application.5

Quality Planning

Design and development planning should culminate in a plan that identifies necessary tasks, procedures for anomaly reporting and resolution, necessary resources, and management review requirements, including formal design reviews. A software life cycle model and associated activities should be identified, as well as those tasks necessary for each software life cycle activity. The plan should include:

· The specific tasks for each life cycle activity

· Enumeration of important quality factors

· Methods and procedures for each task

· Task acceptance criteria

· Criteria for defining and documenting outputs in terms that will allow evaluation of their conformance to input requirements

· Inputs for each task

· Outputs from each task

· Roles, resources, and responsibilities for each task

· Risks and assumptions

· Documentation of user needs

Management must identify and provide the appropriate software development environment and resources. Typically, each task requires personnel as well as physical resources.

The plan should identify the personnel, the facility and equipment resources for each task, and the role that risk (hazard) management will play. A configuration management plan should be developed that will guide and control multiple parallel development activities and ensure proper communications and documentation. Controls are necessary to ensure positive and correct correspondence among all approved versions of the specifications documents, source code, object code, and test suites that comprise a software system. The controls also should ensure accurate identification of, and access to, the currently approved versions.
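One way to picture the control described above is a baseline that records a fingerprint of every approved artifact and is later compared with what is actually in use. The following is a minimal sketch, not a prescribed method: the function names (`fingerprint`, `build_baseline`, `find_mismatches`) and the artifact names are hypothetical, and the use of SHA-256 digests is an assumption made for illustration.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a SHA-256 hex digest identifying one exact version of an artifact."""
    return hashlib.sha256(content).hexdigest()


def build_baseline(artifacts: dict[str, bytes]) -> dict[str, str]:
    """Record the digest of every approved artifact (specification, source, object code, tests)."""
    return {name: fingerprint(data) for name, data in artifacts.items()}


def find_mismatches(baseline: dict[str, str], current: dict[str, bytes]) -> list[str]:
    """List artifacts that are missing, unapproved, or different from the approved baseline."""
    problems = []
    for name in sorted(set(baseline) | set(current)):
        if name not in current:
            problems.append(f"{name}: missing")
        elif name not in baseline:
            problems.append(f"{name}: not in approved baseline")
        elif fingerprint(current[name]) != baseline[name]:
            problems.append(f"{name}: differs from approved version")
    return problems


# Hypothetical artifacts, used only to illustrate the comparison.
approved = build_baseline({"srs.txt": b"requirements v1", "main.c": b"int main(){}"})
in_use = {"srs.txt": b"requirements v2", "main.c": b"int main(){}"}
for problem in find_mismatches(approved, in_use):
    print(problem)
```

In practice a version control or document management system usually fills this role; the sketch only shows the kind of correspondence check that such controls are meant to guarantee.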

Procedures should be created for reporting and resolving software anomalies found through validation or other activities. Management should identify the reports and specify the contents, format, and responsible organizational elements for each report. Procedures also are necessary for the review and approval of software development results, including the responsible organizational elements for such reviews and approvals.

Requirements

Requirement development includes the identification, analysis, and documentation of information about the application and its intended use. Areas of special importance include allocation of system functions to hardware/software, operating conditions, user characteristics, potential hazards, and anticipated tasks. In addition, the requirements should state clearly the intended use of the software. The software requirements specification document should contain a written definition of the software functions.

It is not possible to validate software without predetermined and documented software requirements.

Typical software requirements specify the following:

· All software system inputs

· All software system outputs

· All functions that the software system will perform

· All performance requirements that the software will meet

· The definition of all external and user interfaces, as well as any internal software-to-system interfaces

· How users will interact with the system

· What constitutes an error and how errors should be handled

· Required response times

· The intended operating environment

· All ranges, limits, defaults, and specific values that the software will accept

· All safety related requirements, specifications, features, or functions that will be implemented in software
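Several of the items above (ranges, limits, defaults, and what constitutes an error) become directly testable when they are written down as data rather than prose. The sketch below illustrates this under stated assumptions: the class `NumericInputSpec`, the exception `InputOutOfRange`, the function `accept`, and the example parameter with its limits are all hypothetical and are not taken from any regulation or guidance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NumericInputSpec:
    """One documented input requirement: accepted range and default value."""
    name: str
    minimum: float
    maximum: float
    default: float


class InputOutOfRange(ValueError):
    """The documented error condition: a value outside the specified range."""


def accept(spec: NumericInputSpec, value: Optional[float] = None) -> float:
    """Return the value to use, applying the default and enforcing the range."""
    if value is None:
        return spec.default
    if not (spec.minimum <= value <= spec.maximum):
        raise InputOutOfRange(
            f"{spec.name}={value} is outside [{spec.minimum}, {spec.maximum}]"
        )
    return value


# Hypothetical requirement: mixing speed between 10 and 500 rpm, default 100 rpm.
MIXING_SPEED = NumericInputSpec("mixing_speed_rpm", 10.0, 500.0, 100.0)

print(accept(MIXING_SPEED))         # no value supplied, so the default is used
print(accept(MIXING_SPEED, 250.0))  # value within the documented range
try:
    accept(MIXING_SPEED, 501.0)
except InputOutOfRange as error:
    print("rejected:", error)
```

Because the limits live in one place, the same specification object can drive both the implementation and the test cases that later verify it.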

Software safety requirements are derived from a technical risk management process that is closely integrated with the system requirements development process. Software requirement specifications should identify clearly the potential hazards that can result from a software failure in the system as well as any safety requirements to be implemented in software. The consequences of software failure should be evaluated, along with means of mitigating such failures (e.g., hardware mitigation, defensive programming, etc.). From this analysis, it should be possible to identify the most appropriate measures necessary to prevent harm. A software requirements traceability analysis should be conducted to trace software requirements to (and from) system requirements and to risk analysis results. In addition to any other analyses and documentation used to verify software requirements, a formal design review is recommended to confirm that requirements are fully specified and appropriate before extensive software design efforts begin. Requirements can be approved and released incrementally, but care should be taken that interactions and interfaces among software (and hardware) requirements are properly reviewed, analyzed, and controlled.
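The traceability analysis described above can be pictured as a set of cross-checks between three lists of identifiers. The following is an illustrative sketch only: the function `trace_gaps`, the record layout, and the identifiers such as `SRS-1`, `SYS-1`, and `HAZ-1` are hypothetical, and a real project would normally keep these links in a requirements management tool.

```python
def trace_gaps(software_reqs, system_req_ids, hazard_ids):
    """Report gaps when tracing software requirements to and from
    system requirements and risk analysis results.

    software_reqs maps a software requirement ID to a dict with optional
    "system" and "hazards" lists naming the items it traces to.
    """
    known_system = set(system_req_ids)
    known_hazards = set(hazard_ids)
    traced_system, traced_hazards = set(), set()
    orphans, unknown = [], []

    for req_id, links in sorted(software_reqs.items()):
        parents = set(links.get("system", []))
        hazards = set(links.get("hazards", []))
        if not parents:
            orphans.append(req_id)  # no parent system requirement
        for ref in sorted((parents - known_system) | (hazards - known_hazards)):
            unknown.append((req_id, ref))  # link points at an undefined item
        traced_system |= parents
        traced_hazards |= hazards

    return {
        "software_without_system_parent": orphans,
        "system_without_software": sorted(known_system - traced_system),
        "hazards_without_requirement": sorted(known_hazards - traced_hazards),
        "unknown_references": unknown,
    }


# Hypothetical example data.
report = trace_gaps(
    {
        "SRS-1": {"system": ["SYS-1"], "hazards": ["HAZ-1"]},
        "SRS-2": {"system": [], "hazards": []},
        "SRS-3": {"system": ["SYS-9"]},
    },
    system_req_ids=["SYS-1", "SYS-2"],
    hazard_ids=["HAZ-1", "HAZ-2"],
)
for finding, items in report.items():
    print(finding, items)
```

A system requirement with no software requirement is not necessarily a defect, since it may be met in hardware; the report simply lists it so that a reviewer can confirm the allocation.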

Design

The decision to implement system functionality using software is one that is typically made during system design. Software requirements are typically derived from the overall system requirements and design for those aspects in the system that are to be implemented using software. There are user needs and intended uses for a finished product, but users typically do not specify whether those requirements are to be met by hardware, software, or some combination of both. Therefore, software validation must be considered within the context of the overall design validation for the system. A documented requirements specification represents the user’s needs and intended uses from which the product is developed. A primary goal of software validation is to then demonstrate that all completed software products comply with all documented software and system requirements. The correctness and completeness of both the system requirements and the software requirements should be addressed as part of the design validation process for that application. Software validation includes confirmation of conformance to all software specifications and confirmation that all software requirements are traceable to the system specifications.6

Confirmation is an important part of the overall design validation to ensure that all aspects of the design conform to user needs and intended uses. In the design process, the software requirements specification is translated into a logical and physical representation of the software to be implemented. The software design specification is a description of what the software should do and how it should do it. Due to the complexity of the project, or to enable persons with varying levels of technical responsibility to clearly understand design information, the design specification may contain both a high-level summary of the design and detailed design information. The completed software design specification constrains the programmer/coder to stay within the intent of the agreed-upon requirements and design. A complete software design specification will relieve the programmer from the need to make ad hoc design decisions.

The software design needs to address human factors. Use error caused by designs that are either overly complex or contrary to users’ intuitive expectations for operation is one of the most persistent and critical problems encountered by the FDA. Frequently, the design of the software is a factor in such use errors.

Human factor engineering should be woven into the entire design and development process, including the design requirements, analysis, and tests. Safety and usability issues should be considered when developing flow charts, state diagrams, prototyping tools, and test plans. Also, task and function analysis, risk analysis, prototype tests and reviews, and full usability tests should be performed. Participants from the user population should be included when applying these methodologies.

The software design specification should include:

· Software requirements specification, including predetermined criteria for acceptance of the software

· Software risk analysis

· Development procedures and coding guidelines (or other programming procedures)

· Systems documentation (e.g., a narrative or a context diagram) that describes the systems context in which the program is intended to function, including the relationship of hardware, software, and the physical environment

· Hardware to be used

· Parameters to be measured or recorded

· Logical structure (including control logic) and logical processing steps (e.g., algorithms)

· Data structures and data flow diagrams

· Definitions of variables (control and data) and description of where they are used

· Error, alarm, and warning messages

· Supporting software (e.g., operating systems, drivers, other application software)

· Communication links (links among internal modules of the software, links with the supporting software, links with the hardware, and links with the user)

· Security measures (both physical and logical security)

The activities that occur during software design have several purposes. Software design evaluations are conducted to determine if the design is complete, correct, consistent, unambiguous, feasible, and maintainable. Appropriate consideration of software architecture (e.g., modular structure) during design can reduce the magnitude of future validation efforts when software changes are needed. Software design evaluations may include analysis of control flow, data flow, complexity, timing, sizing, memory allocation, criticality analysis, and many other aspects of the design. A traceability analysis should be conducted to verify that the software design implements all of the software requirements. As a technique for identifying where requirements are not sufficient, the traceability analysis should also verify that all aspects of the design are traceable to software requirements. An analysis of communication links should be conducted to evaluate the proposed design with respect to hardware, user, and related software requirements. The software risk analysis should be re-examined to determine whether any additional hazards have been identified and whether any new hazards have been introduced by the design. At the end of the software design activity, a Formal Design Review should be conducted to verify that the design is correct, consistent, complete, accurate, and testable before moving to implement the design. Portions of the design can be approved and released incrementally for implementation, but care should be taken that interactions and communication links among various elements are properly reviewed, analyzed, and controlled.

Most software development models will be iterative. This is likely to result in several versions of both the software requirements specification and the software design specification. All approved versions should be archived and controlled in accordance with established configuration management procedures.

Construction or Coding

Software may be constructed either by coding (i.e., programming) or by assembling previously coded software components (e.g., from code libraries, off-the-shelf software, etc.) for use in a new application.

Coding is the software activity where the detailed design specification is implemented as source code. Coding is the lowest level of abstraction for the software development process. It is the last stage in decomposition of the software requirements where module specifications are translated into a programming language. Coding usually involves the use of a high-level programming language, but may also entail the use of assembly language (or microcode) for time-critical operations. The source code may be either compiled or interpreted for use on a target hardware platform.

Decisions on the selection of programming languages and software build tools (assemblers, linkers, and compilers) should include consideration of the impact on subsequent quality evaluation tasks (e.g., availability of debugging and testing tools for the chosen language).

Some compilers offer optional levels and commands for error checking to assist in debugging the code. Different levels of error checking may be used throughout the coding process, and warnings or other messages from the compiler may or may not be recorded. However, at the end of the coding and debugging process, the most rigorous level of error checking is normally used to document what compilation errors still remain in the software. If the most rigorous level of error checking is not used for final translation of the source code, then justification for use of the less rigorous translation error checking should be documented. Also, for the final compilation, there should be documentation of the compilation process and its outcome, including any warnings or other messages from the compiler and their resolution, or justification for the decision to leave issues unresolved.

Firms frequently adopt specific coding guidelines that establish quality policies and procedures related to the software coding process. Source code should be evaluated to verify its compliance with specified coding guidelines. Such guidelines should include coding conventions regarding clarity, style, complexity management, and commenting. Code comments should provide useful and descriptive information for a module, including expected inputs and outputs, variables referenced, expected data types, and operations to be performed. Source code should also be evaluated to verify its compliance with the corresponding detailed design specification. Modules ready for integration and test should have documentation of compliance with coding guidelines and any other applicable quality policies and procedures.
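As an illustration of the commenting practice described above, the sketch below shows one possible comment header covering expected inputs and outputs, data types, variables referenced, and operations performed. The function `mean_fill_weight`, its units, and the layout of the comment are hypothetical; an actual coding guideline would define its own template.

```python
def mean_fill_weight(weights_mg: list[float]) -> float:
    """Compute the mean fill weight of a sample.

    Inputs:
        weights_mg: individual unit weights in milligrams (non-empty list
            of positive floats).
    Output:
        Arithmetic mean of the weights in milligrams (float).
    Variables referenced:
        None outside this function; no global state is read or changed.
    Operations:
        1. Reject an empty sample.
        2. Reject any non-positive weight.
        3. Sum the weights and divide by the sample size.
    Errors:
        ValueError if the sample is empty or contains a non-positive weight.
    """
    if not weights_mg:
        raise ValueError("sample is empty")
    if any(weight <= 0 for weight in weights_mg):
        raise ValueError("weights must be positive")
    return sum(weights_mg) / len(weights_mg)


print(mean_fill_weight([100.0, 102.0, 98.0]))
```

A header of this kind also gives a reviewer something concrete to compare against the detailed design specification during source code evaluation.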

Source code evaluations are often implemented as code inspections and code walkthroughs. Such static analyses provide a very effective means to detect errors before execution of the code. They allow for examination of each error in isolation and can also help in focusing later dynamic testing of the software. Firms may use manual (desk) checking with appropriate controls to ensure consistency and independence. Source code evaluations should be extended to verification of internal linkages between modules and layers (horizontal and vertical interfaces) and compliance with their design specifications. Documentation of the procedures used and the results of source code evaluations should be maintained as part of design verification.
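Part of a source code evaluation can be automated so that reviewers spend their time on logic rather than on mechanical checks. The sketch below is one small example of static analysis, under the assumption that the coding guideline requires every function to carry a descriptive comment; it uses Python's standard `ast` module to examine the code without executing it. The function name `functions_missing_docstrings` and the sample source are hypothetical, and the check supplements rather than replaces an inspection or walkthrough.

```python
import ast


def functions_missing_docstrings(source: str) -> list[tuple[int, str]]:
    """Return (line number, name) for each function that has no docstring.

    The source text is parsed but never executed.
    """
    tree = ast.parse(source)
    missing = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                missing.append((node.lineno, node.name))
    return sorted(missing)


# Hypothetical module text to be checked against the guideline.
SAMPLE = (
    "def documented():\n"
    "    'Return a fixed value.'\n"
    "    return 1\n"
    "\n"
    "def undocumented():\n"
    "    return 2\n"
)

for line_number, name in functions_missing_docstrings(SAMPLE):
    print(f"line {line_number}: function '{name}' has no descriptive comment")
```

The findings, together with the procedure used to produce them, are the kind of record that can be retained as part of design verification.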

Testing by the Software Developer

Software testing entails running software products under known conditions with defined inputs and documented outcomes that can be compared to their predefined expectations. It is a time-consuming, difficult, and imperfect activity. As such, it requires early planning in order to be effective and efficient. Test plans